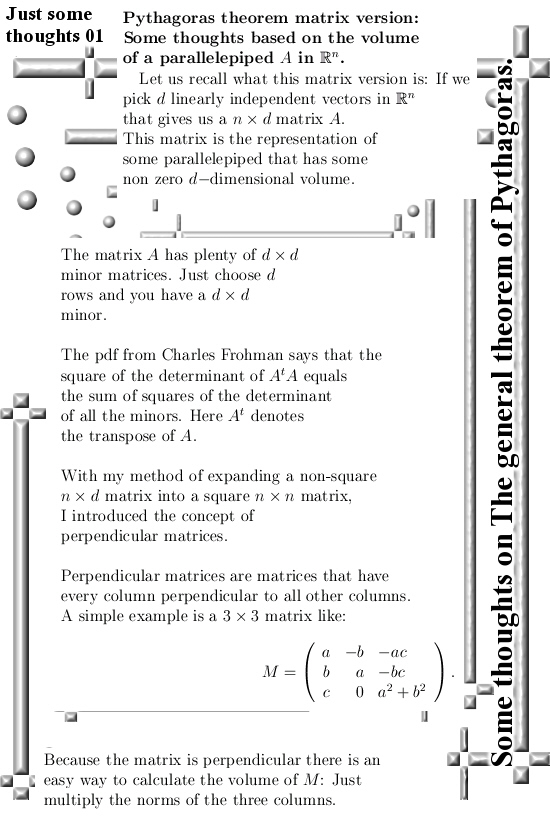

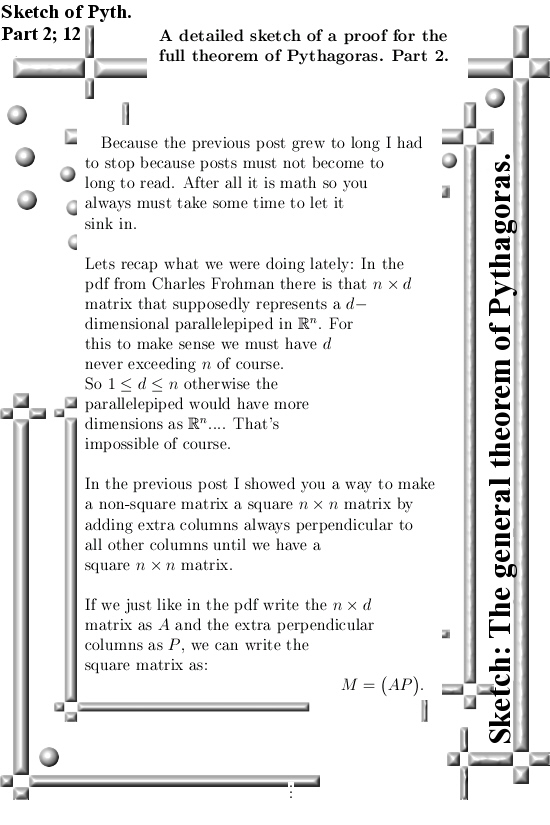

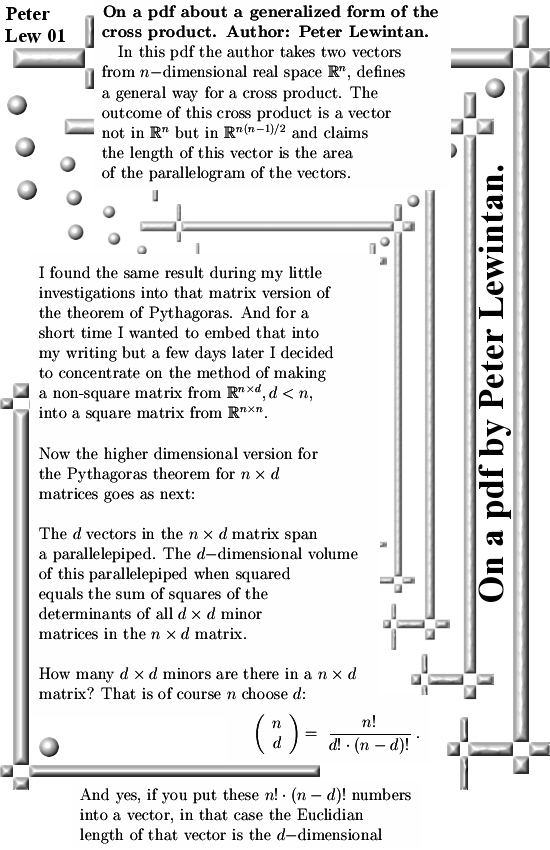

I have had this pdf for about a year now. Last year, when I was working on that matrix form of the theorem of Pythagoras, I came across this pdf and saved it for another day.

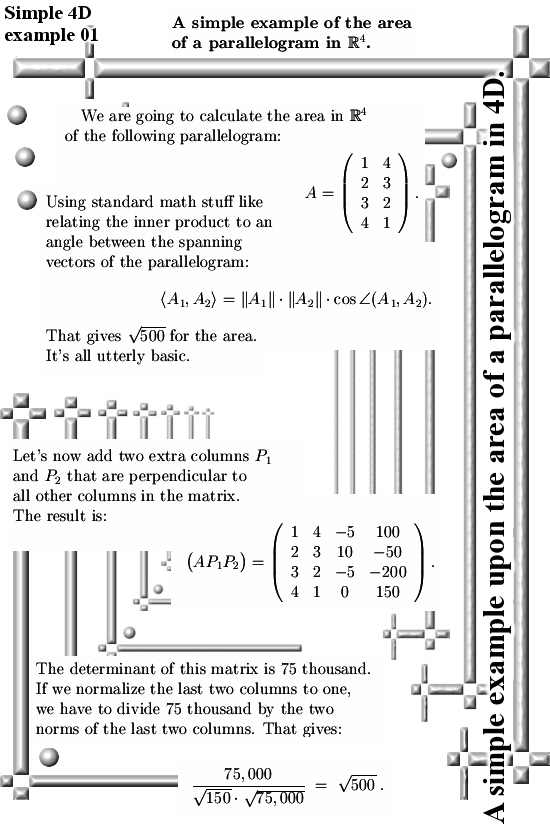

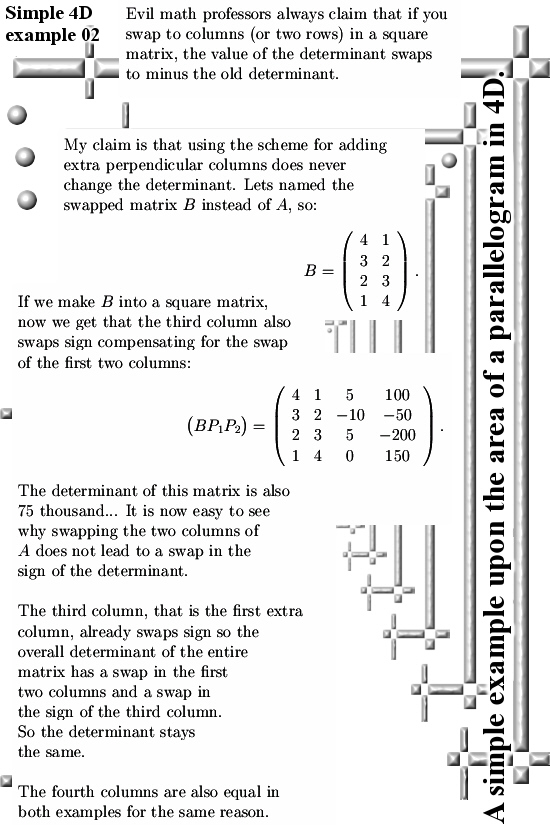

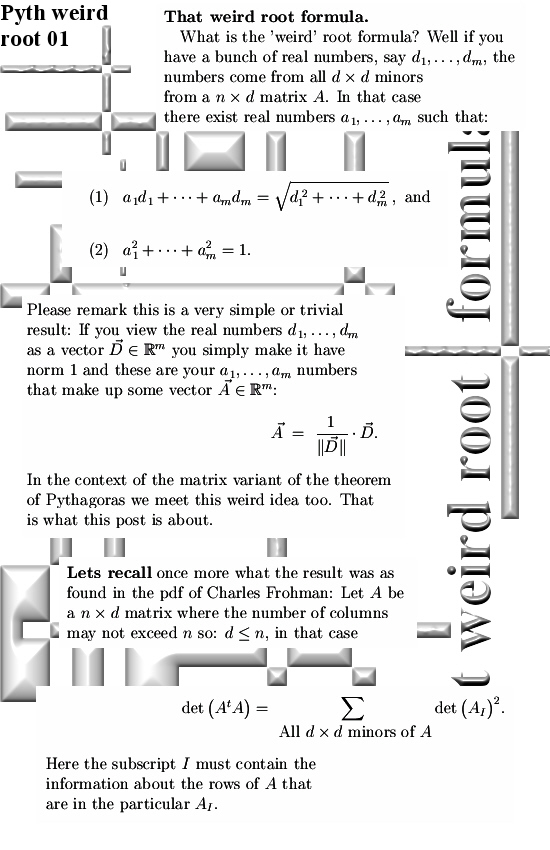

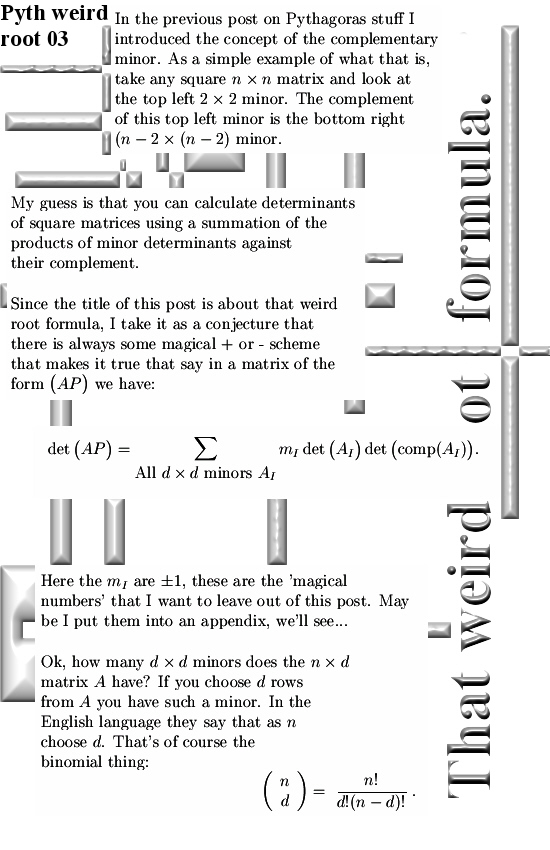

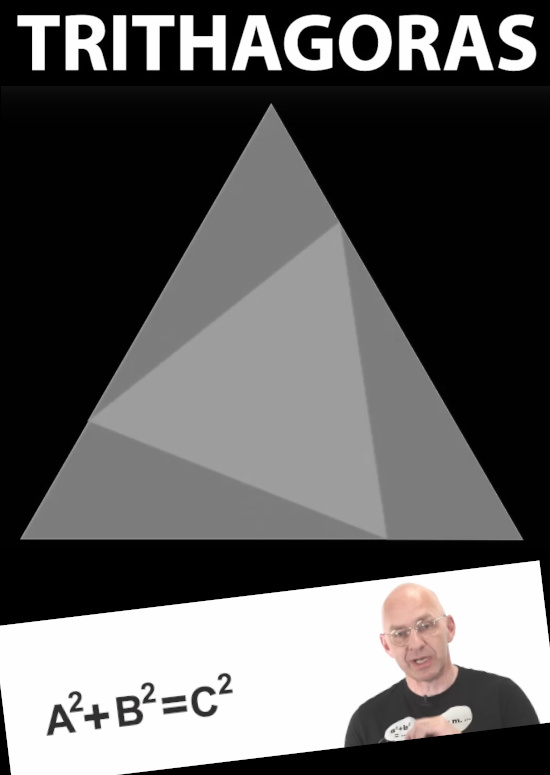

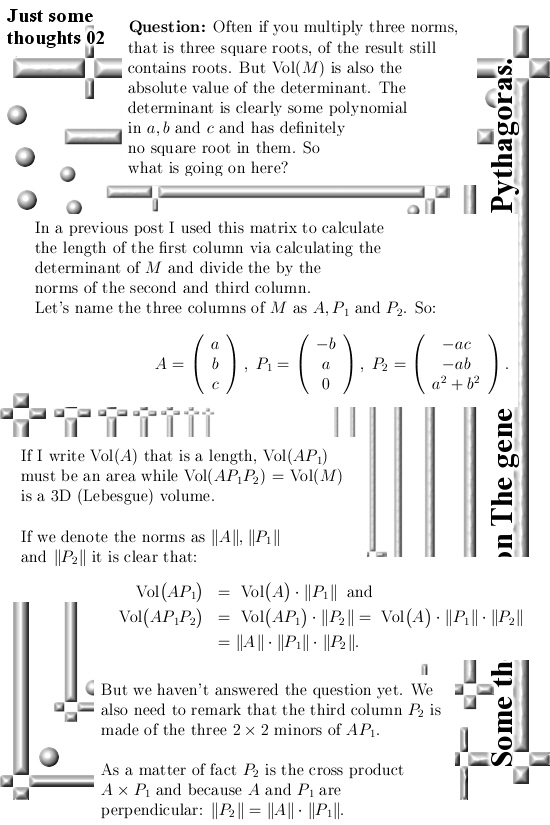

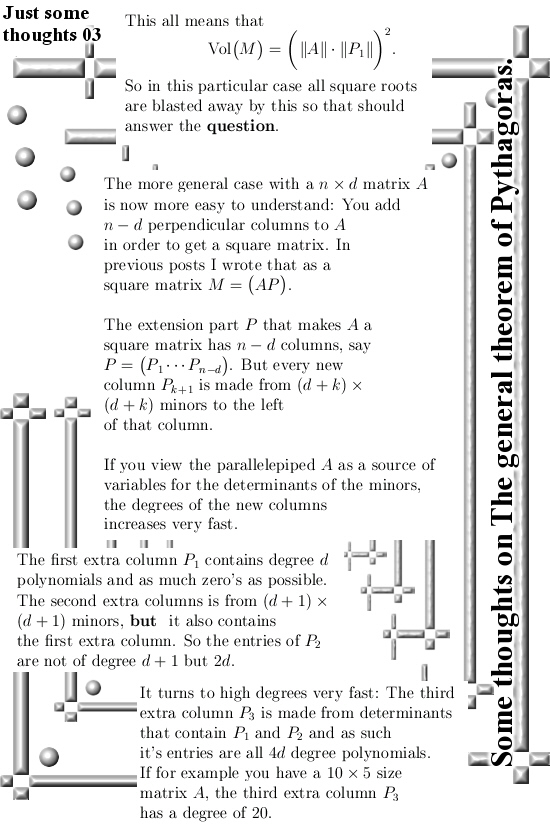

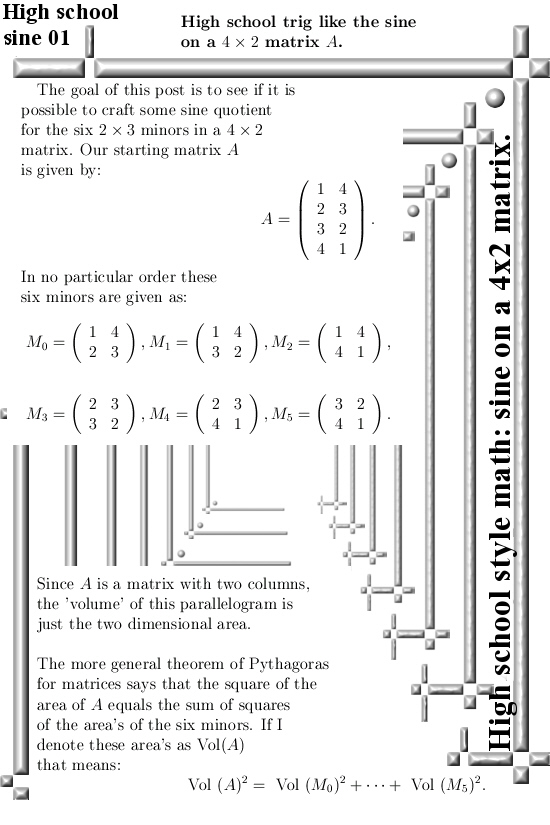

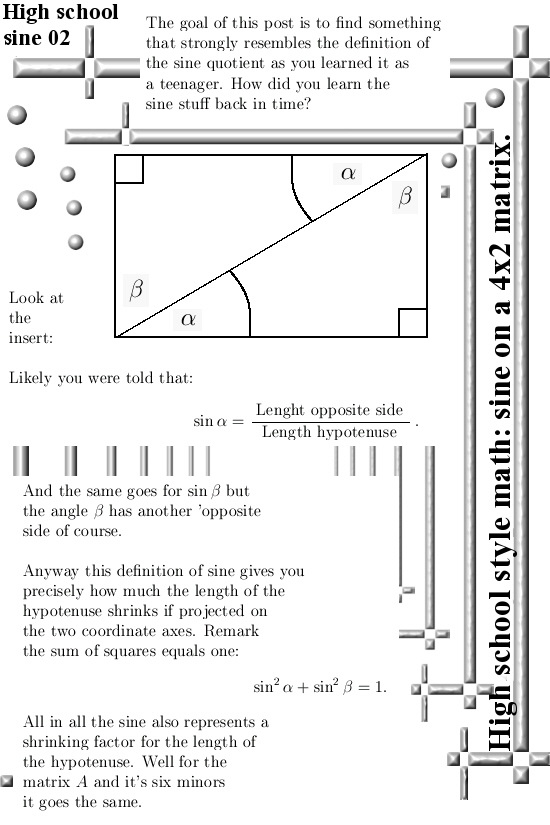

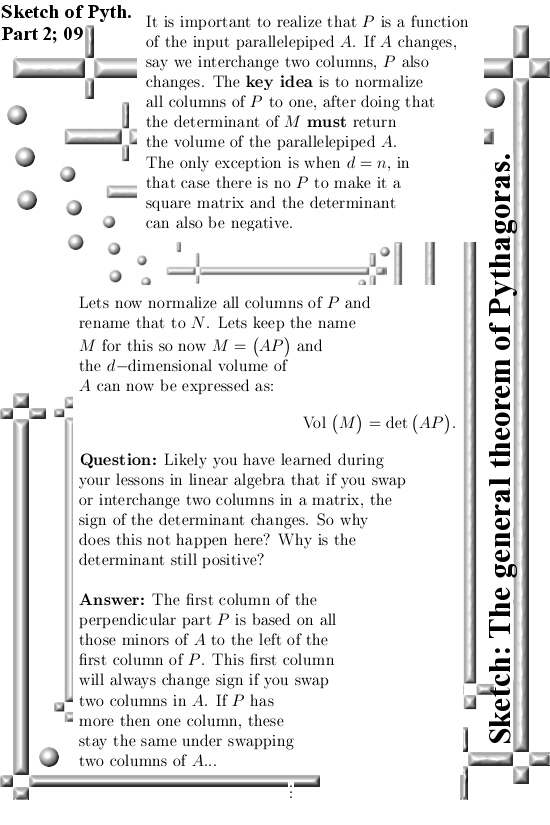

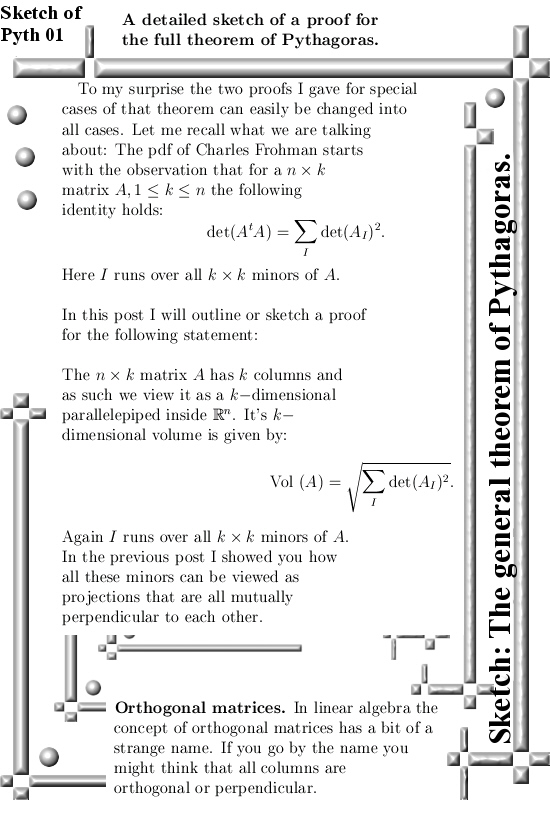

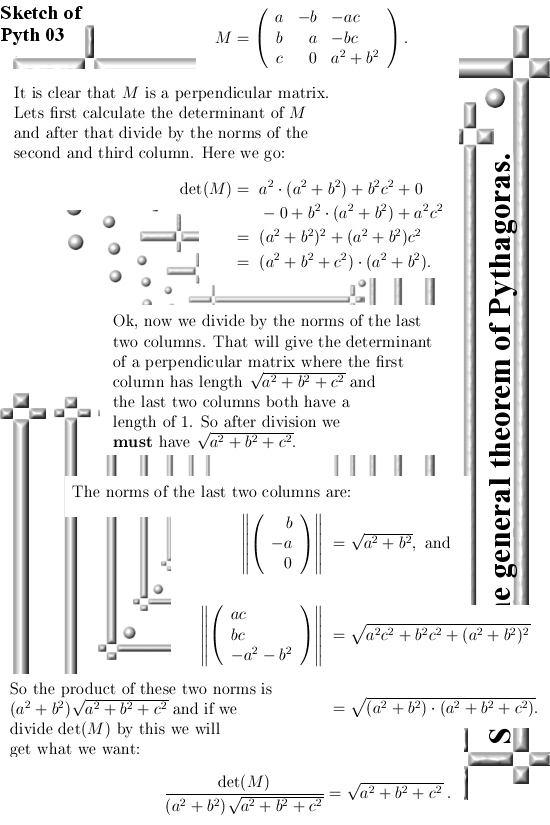

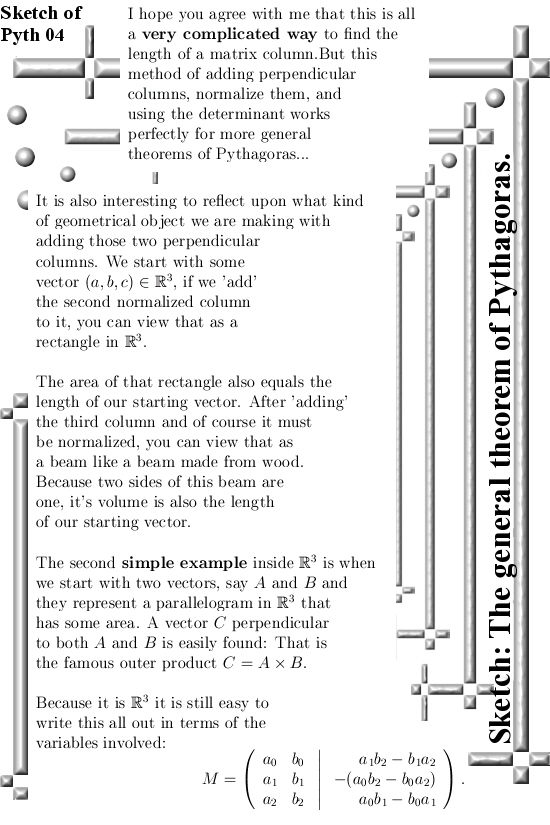

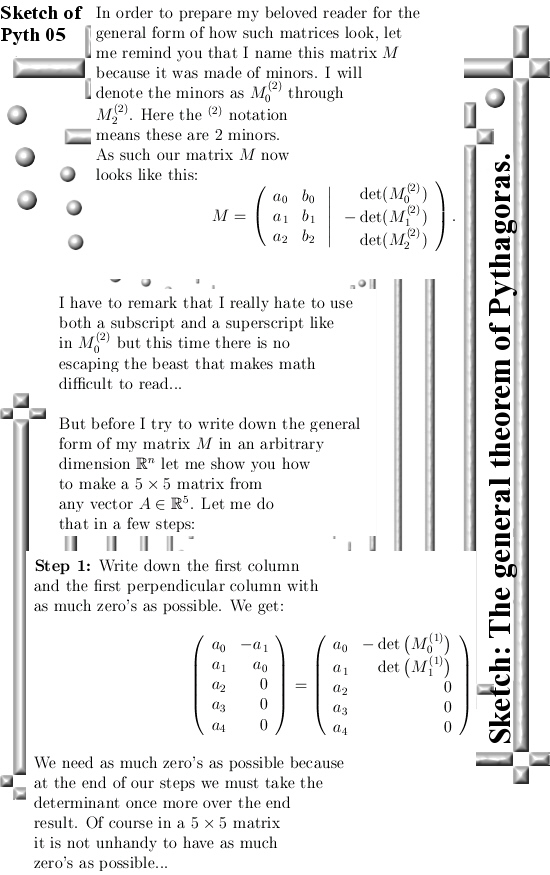

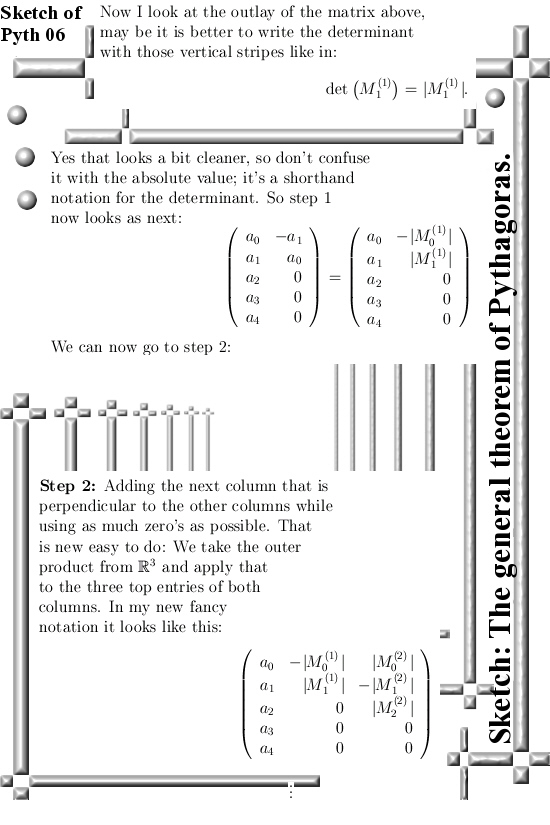

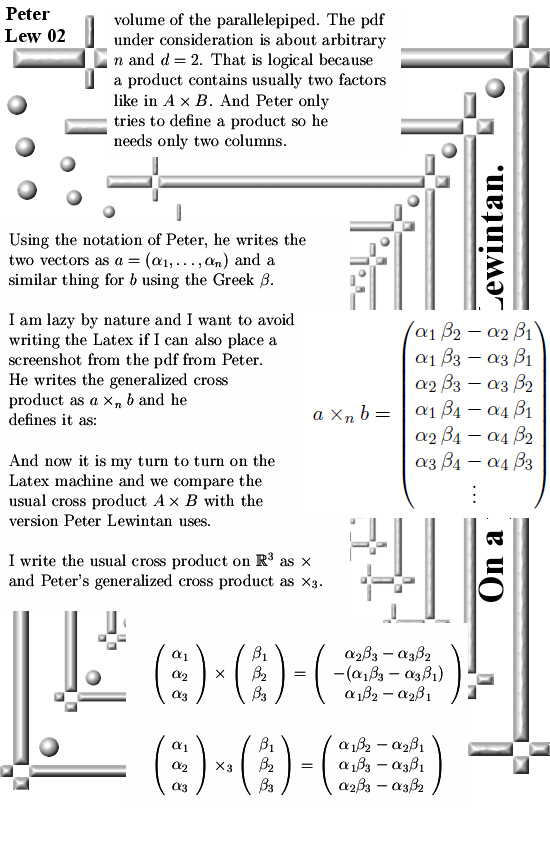

Last year, when working on that matrix Pythagoras stuff, you always get a large bunch of determinants of those minor matrices. And I thought: “Hey, you can put them in an array, and then the length of that array is the volume of that parallelepiped.” Well, in this pdf Peter does exactly that: he does not use an n×d matrix but only two columns, because the goal is to make a product.
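To make the two-column idea concrete, here is a minimal sketch, assuming the two columns are plain Python lists `a` and `b` of equal length n. It collects all 2×2 minors of the n×2 matrix with columns a and b into one array, and checks that the length of that array equals the area of the parallelogram spanned by a and b, computed independently as sqrt(|a|²|b|² − (a·b)²) (Lagrange's identity). For n = 3 the three minors are, up to ordering and sign, the components of the usual cross product. The function names are hypothetical and are not Peter's notation.

```python
from itertools import combinations
import math


def minors_2x2(a, b):
    """All 2x2 minors of the n x 2 matrix with columns a and b, one per row pair i < j."""
    if len(a) != len(b):
        raise ValueError("columns must have equal length")
    return [a[i] * b[j] - a[j] * b[i] for i, j in combinations(range(len(a)), 2)]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def area_from_minors(a, b):
    """Length of the array of minors = area of the parallelogram spanned by a and b."""
    return math.sqrt(sum(m * m for m in minors_2x2(a, b)))


def area_from_gram(a, b):
    """Same area via the Gram determinant |a|^2 |b|^2 - (a.b)^2 (clamped against rounding)."""
    return math.sqrt(max(0.0, dot(a, a) * dot(b, b) - dot(a, b) ** 2))


a = [1.0, 2.0, 3.0, 4.0]
b = [0.0, 1.0, -1.0, 2.0]
print(len(minors_2x2(a, b)))  # n = 4 gives C(4, 2) = 6 minors
print(area_from_minors(a, b), area_from_gram(a, b))  # both sqrt(131)
```

In four dimensions there are six minors, so the "product" of two vectors no longer lives in the same space as the vectors; only for n = 3 do you get three numbers back, which is why the cross product looks so special there.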

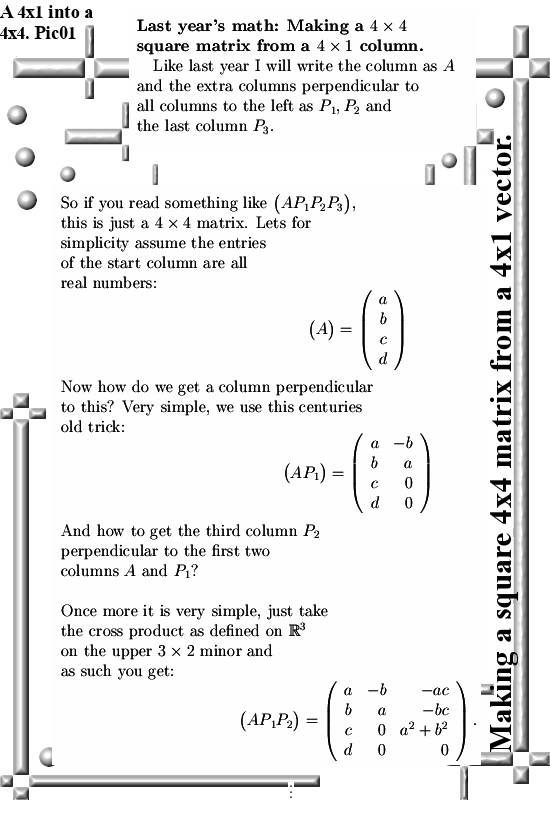

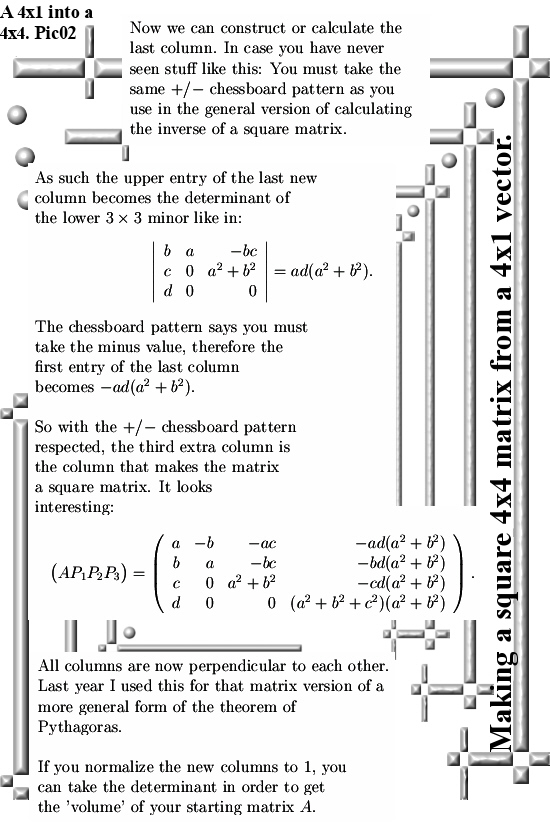

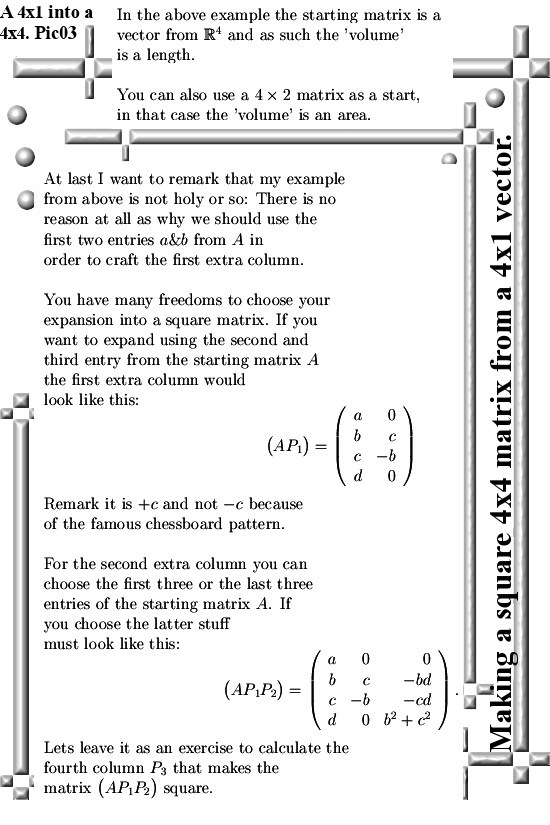

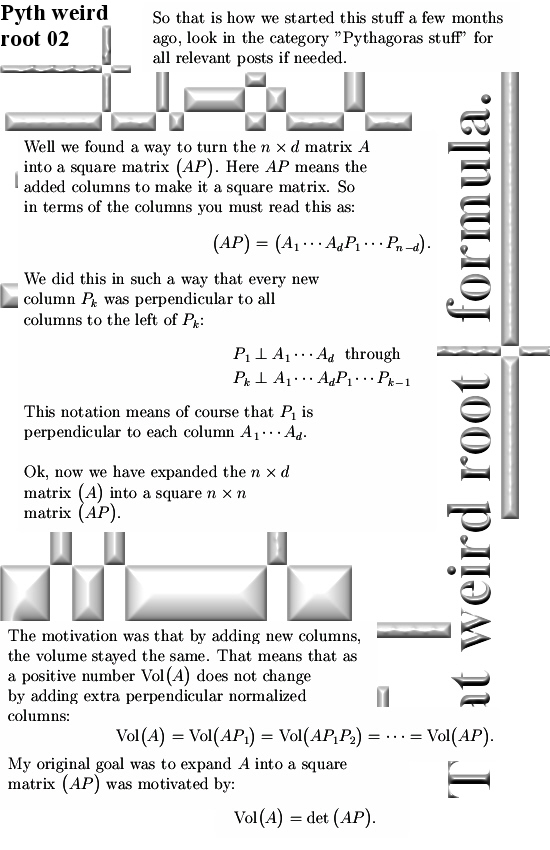

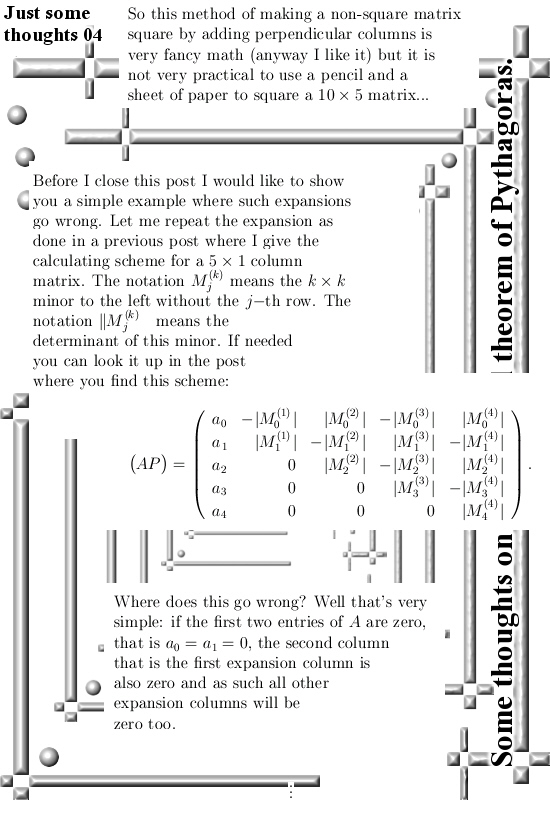

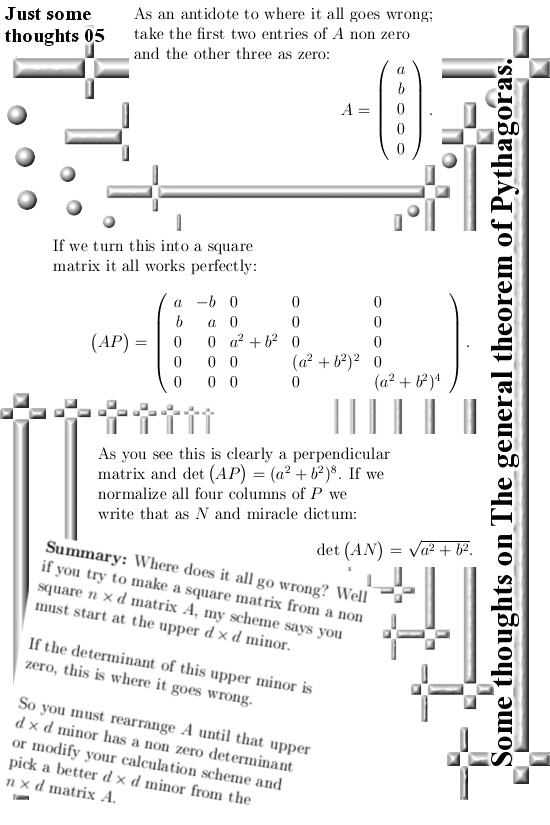

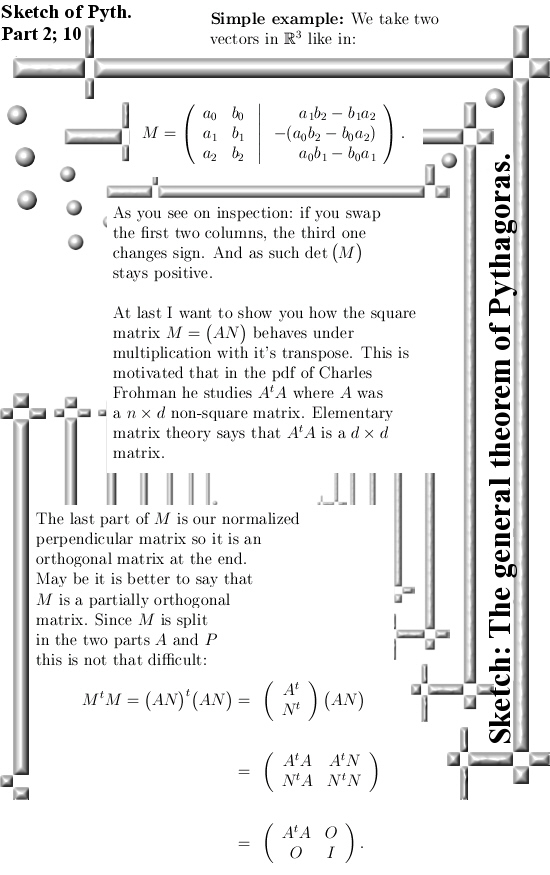

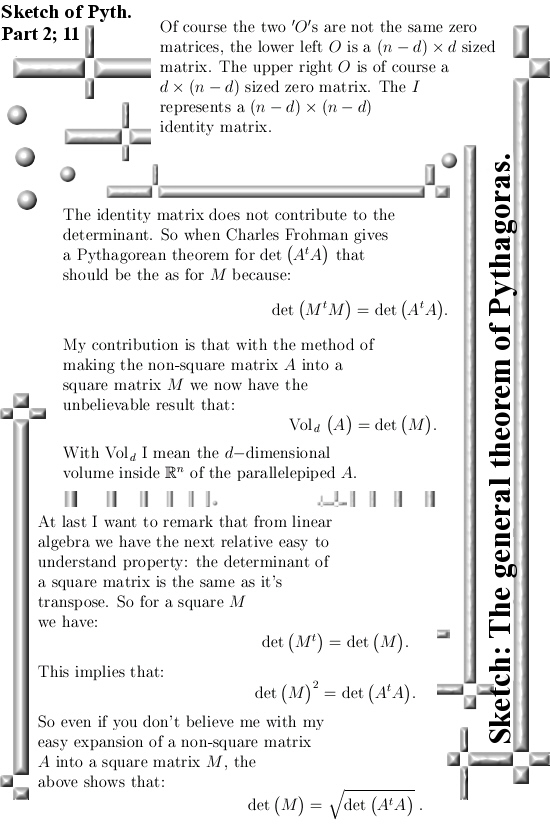

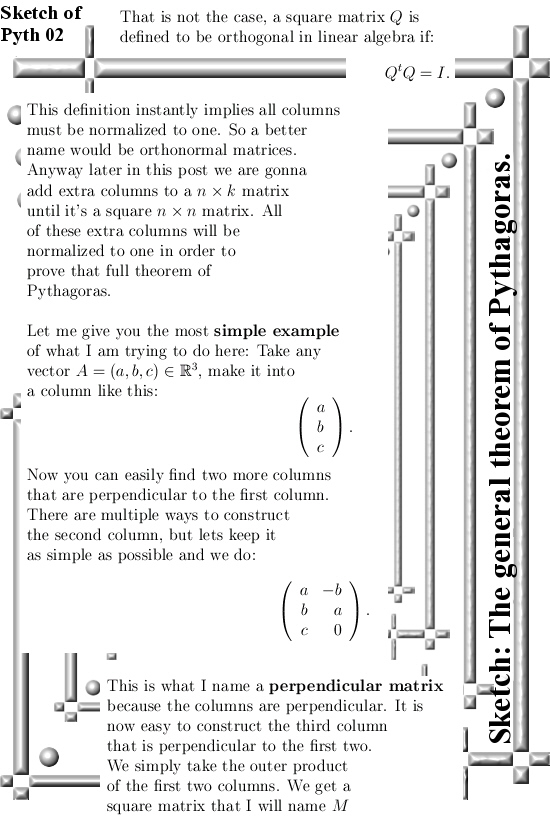

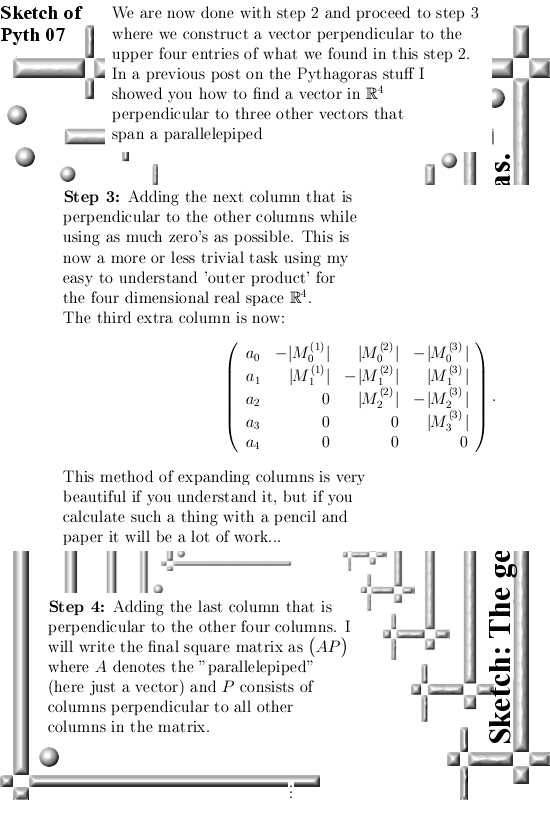

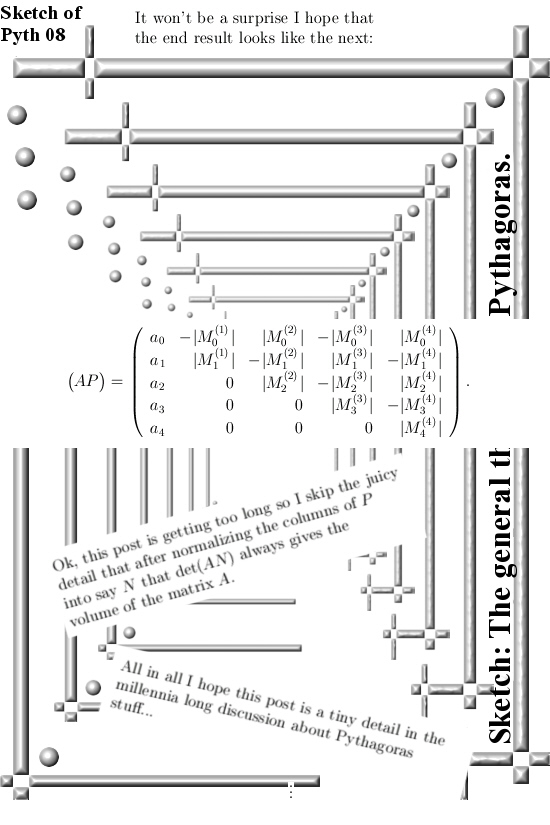

Last year, after about an hour of thinking, I decided to skip that line of investigation and instead concentrate on whether it would be possible to turn that non-square n×d matrix into a square n×n matrix.

Another reason to save this pdf for a later day was that it also deals with differential operators. Not that I am very deep into that, but the curl is a beautiful operator and, as you likely know, it can be expressed with the help of the usual cross product in three dimensions. To be honest, I found this part of the pdf a bit underwhelming. I really tried to bake some edible cake from that form of differential operators, but I could not give a meaning to it. Maybe I am wrong, so if you want, you can try for yourself.
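For reference, the curl written as a formal cross product is ∇ × F = (∂F_z/∂y − ∂F_y/∂z, ∂F_x/∂z − ∂F_z/∂x, ∂F_y/∂x − ∂F_x/∂y). Below is a small numerical sketch of that formula, approximating the partial derivatives with central differences. The step size `h` and the helper names are assumptions of this sketch, not anything taken from Peter's pdf.

```python
def partial(f, point, axis, h=1e-5):
    """Central-difference approximation of the partial derivative of scalar f along one axis."""
    forward = list(point)
    backward = list(point)
    forward[axis] += h
    backward[axis] -= h
    return (f(forward) - f(backward)) / (2 * h)


def curl(F, point, h=1e-5):
    """Curl of a vector field F: R^3 -> R^3 at a point, following the cross-product pattern."""
    def component(i):
        return lambda p: F(p)[i]

    Fx, Fy, Fz = component(0), component(1), component(2)
    return (
        partial(Fz, point, 1, h) - partial(Fy, point, 2, h),
        partial(Fx, point, 2, h) - partial(Fz, point, 0, h),
        partial(Fy, point, 0, h) - partial(Fx, point, 1, h),
    )


def rotation(p):
    """Rigid rotation about the z-axis, F = (-y, x, 0); its curl is (0, 0, 2) everywhere."""
    return (-p[1], p[0], 0.0)


def gradient_of_xyz(p):
    """Gradient of x*y*z, i.e. (yz, xz, xy); a gradient field has zero curl."""
    x, y, z = p
    return (y * z, x * z, x * y)


print(curl(rotation, (1.0, 2.0, 3.0)))         # approximately (0, 0, 2)
print(curl(gradient_of_xyz, (1.0, 2.0, 3.0)))  # approximately (0, 0, 0)
```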

The pdf contains much more than the above: a lot of cute old identities and also some rather technical math that I skipped, because sometimes I am good at being lazy… ;)

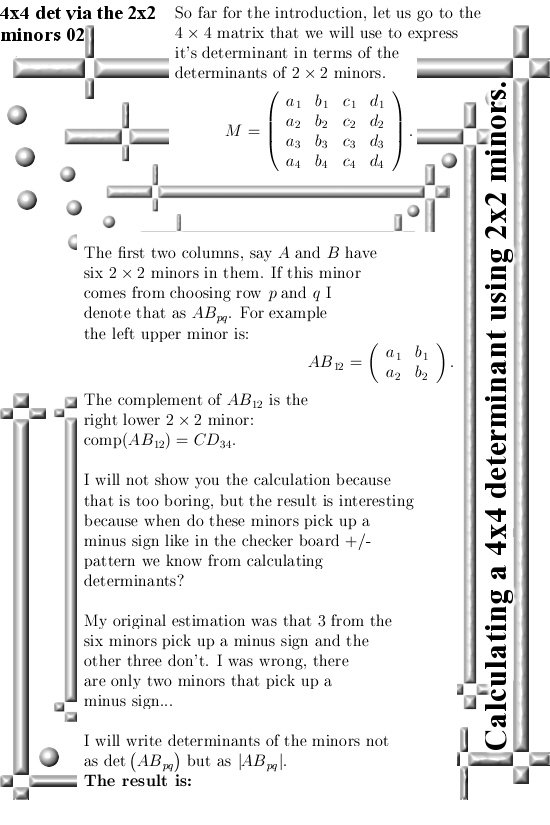

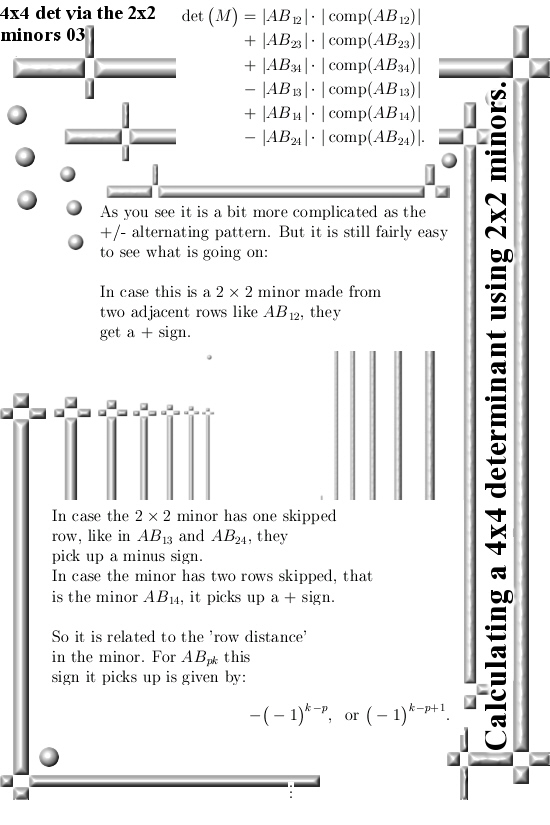

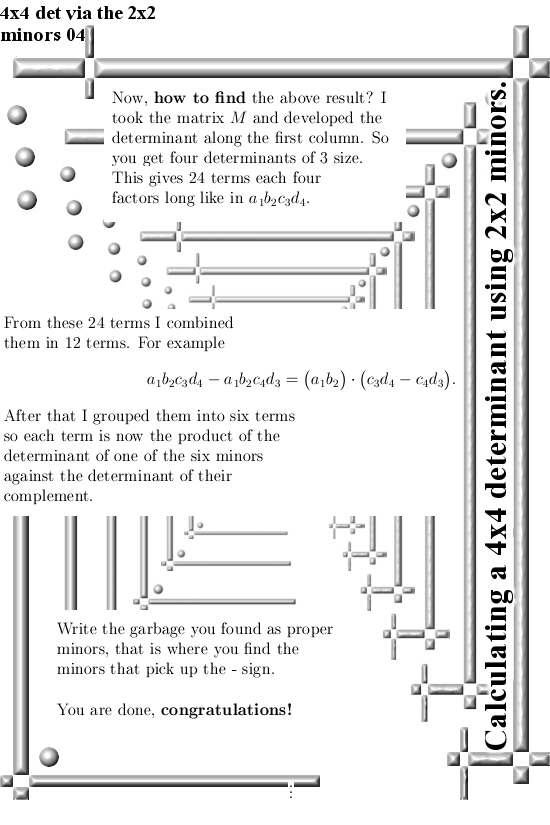

My comments are four pictures long, and after that I will try to embed Peter's pdf into this post.

What is it that makes present-day picture generators so bad at drawing things with five fingers? I think those generative AI machines don’t count on their fingers.

But seriously, here is the pdf:

I posted this in the category Pythagoras stuff because it is vaguely related to that matrix version of the Pythagoras theorem.

OK, that was it for the time being. Maybe the next post is about some nutty professor who claims in a video that 3D complex numbers don’t exist. Or something completely different. Who knows where our emotions bring us?